Achieving 90% Recall with a Deep Neural Network: How SMOTE and ModelCheckpoint Callbacks Improved Results on a Skewed Classification Problem

"Hey, I started a Data Science Project" series

Hey, I am back. It has been a busy couple of months, but I am glad to be working on new datasets again.

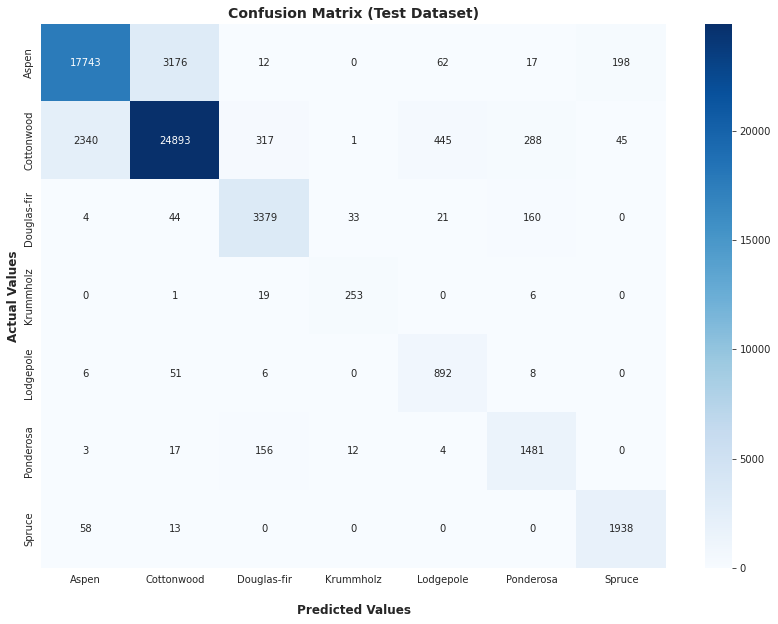

In this project, I trained a deep neural network to classify 1.2 million cartographic data points (after data augmentation) into 7 classes. On the test data, it achieved an average recall of 90.4%. The network has 8,072 parameters and 190 neurons (counting the input layer), and consists of a single hidden layer of 128 neurons connected to a final 8-way softmax output. Before data augmentation, the dataset contains 581,012 cartographic data points belonging to 7 cover types. These were derived from data obtained from the US Geological Survey (USGS) and the US Forest Service (USFS) Region 2 Resource Information System. The 7 cover types are:

Spruce/Fir

Lodgepole Pine

Ponderosa Pine

Cottonwood/Willow

Aspen

Douglas-fir

Krummholz
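The architecture figures above can be checked by hand. A fully connected (Dense) layer with n_in inputs and n_out units has n_in × n_out weights plus n_out biases. The sketch below assumes the 54 input features of the UCI Covertype data (10 quantitative variables plus 4 wilderness-area and 40 soil-type indicator columns); the 128 and 8 layer sizes come from the text, and `dense_params` is a hypothetical helper written for illustration.

```python
# Parameter and neuron counts for a small fully connected network.
# Assumption: 54 input features (UCI Covertype); 128 hidden and 8 output
# units are the sizes stated in the text.

def dense_params(n_in: int, n_out: int) -> int:
    """Weights (n_in * n_out) plus one bias per output unit."""
    return n_in * n_out + n_out

layer_sizes = [54, 128, 8]  # input, hidden, softmax output

# Sum parameters over each consecutive pair of layers
total_params = sum(
    dense_params(n_in, n_out)
    for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:])
)
# Neuron count including the input layer
total_neurons = sum(layer_sizes)

print(total_params)   # 7040 + 1032 = 8072
print(total_neurons)  # 54 + 128 + 8 = 190
```

The totals match the 8,072 parameters and 190 neurons quoted above, which suggests the neuron count includes the 54 input units. The Covertype labels are the integers 1 to 7, so one plausible reason for an 8-unit output on a 7-class problem is feeding those labels directly to a sparse categorical loss, leaving index 0 unused; the text does not say so, and this is an assumption.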

Here are the highlights of how I achieved this result and handled the overall execution:

I addressed the skewed class distribution problem in the dataset using the Synthetic Minority Over-sampling Technique (SMOTE).

I applied the Keras ModelCheckpoint callback during training to save the best model, as measured by validation loss.

I mitigated some of the reproducibility issues inherent to neural networks, whose results vary from one training run to the next, by applying the Central Limit Theorem.
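SMOTE creates synthetic minority-class samples by picking a minority point, picking one of its k nearest minority-class neighbours, and placing a new point at a random position on the line segment between them. The sketch below implements that basic idea from scratch with NumPy so the mechanics are visible; it is not necessarily the code used in this project, and the helper names `smote` and `oversample` are hypothetical. In practice, the imbalanced-learn library provides this as `SMOTE(k_neighbors=5, random_state=42).fit_resample(X, y)`.

```python
import numpy as np

def smote(X_min, n_synthetic, k=5, rng=None):
    """Generate n_synthetic points from minority-class rows X_min (basic SMOTE).

    Requires at least 2 minority samples. Each synthetic point lies on the
    segment between a random minority sample and one of its k nearest
    minority-class neighbours.
    """
    rng = np.random.default_rng(rng)
    X_min = np.asarray(X_min, dtype=float)
    n = len(X_min)
    k = min(k, n - 1)  # cannot have more neighbours than other samples
    # Brute-force pairwise squared distances (fine for small toy data only)
    d2 = ((X_min[:, None, :] - X_min[None, :, :]) ** 2).sum(axis=-1)
    np.fill_diagonal(d2, np.inf)  # a point is not its own neighbour
    neighbours = np.argsort(d2, axis=1)[:, :k]

    base = rng.integers(0, n, size=n_synthetic)                     # random minority rows
    pick = neighbours[base, rng.integers(0, k, size=n_synthetic)]   # one neighbour each
    gap = rng.random((n_synthetic, 1))                              # factor in [0, 1)
    return X_min[base] + gap * (X_min[pick] - X_min[base])

def oversample(X, y, k=5, rng=None):
    """Oversample every class up to the size of the largest class."""
    rng = np.random.default_rng(rng)
    X, y = np.asarray(X, dtype=float), np.asarray(y)
    classes, counts = np.unique(y, return_counts=True)
    target = counts.max()
    X_parts, y_parts = [X], [y]
    for cls, count in zip(classes, counts):
        if count < target:
            synth = smote(X[y == cls], target - count, k=k, rng=rng)
            X_parts.append(synth)
            y_parts.append(np.full(len(synth), cls))
    return np.vstack(X_parts), np.concatenate(y_parts)

# Toy usage: 6 majority points and 3 minority points in 2-D
X = np.array([[0, 0], [0, 1], [1, 0], [1, 1], [0.5, 0.5], [0.2, 0.8],
              [5, 5], [5, 6], [6, 5]])
y = np.array([0, 0, 0, 0, 0, 0, 1, 1, 1])
X_res, y_res = oversample(X, y, k=2, rng=0)
print(np.bincount(y_res))  # [6 6]
```

The brute-force distance matrix keeps the example short but grows quadratically with class size, so a dataset of this scale calls for a library implementation with an efficient neighbour search. A common precaution is to oversample only the training split, so that synthetic points never leak into the validation or test data.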
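In Keras, saving the best model by validation loss is typically configured as `keras.callbacks.ModelCheckpoint(filepath, monitor="val_loss", save_best_only=True)` and passed to `model.fit(..., callbacks=[checkpoint])`; the exact arguments used in this project are not given. To show the rule that callback applies after every epoch without needing TensorFlow, the sketch below uses a hypothetical `BestCheckpoint` class that keeps the weights in memory instead of writing a file.

```python
import copy

class BestCheckpoint:
    """Keep the weights from the epoch with the lowest validation loss.

    Hypothetical stand-in for keras.callbacks.ModelCheckpoint(
        filepath, monitor="val_loss", save_best_only=True); it stores a copy
    of the weights in memory rather than saving to disk.
    """

    def __init__(self):
        self.best_loss = float("inf")
        self.best_epoch = None
        self.best_weights = None

    def on_epoch_end(self, epoch, val_loss, weights):
        # Save only when validation loss improves on the best value so far
        if val_loss < self.best_loss:
            self.best_loss = val_loss
            self.best_epoch = epoch
            self.best_weights = copy.deepcopy(weights)

# Toy usage with made-up validation losses that rise after epoch 2 (0-based)
val_losses = [0.90, 0.62, 0.55, 0.58, 0.71]
ckpt = BestCheckpoint()
for epoch, loss in enumerate(val_losses):
    weights = {"epoch_trained": epoch}  # placeholder for real model weights
    ckpt.on_epoch_end(epoch, loss, weights)

print(ckpt.best_epoch, ckpt.best_loss)  # 2 0.55
```

Because later epochs with a higher validation loss never overwrite the saved copy, the model that is kept is the one from before validation performance started to degrade, even if training runs for more epochs.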
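The post does not spell out how the Central Limit Theorem was applied. One common reading is to train the network several times with different random seeds and summarize the resulting scores by their mean and a confidence interval: by the CLT, the mean of n independent runs is approximately normal with standard error s/√n. The sketch below assumes that approach; `mean_with_ci` is a hypothetical helper, and the recall values are placeholders, not results from this project.

```python
import math
import statistics

def mean_with_ci(scores, z=1.96):
    """Mean of repeated-run scores with a normal-approximation confidence interval.

    By the Central Limit Theorem, the mean of n independent runs is roughly
    normal with standard error s / sqrt(n), so mean +/- z * SE is an
    approximate 95% interval when z = 1.96.
    """
    n = len(scores)
    mean = statistics.fmean(scores)
    se = statistics.stdev(scores) / math.sqrt(n)  # sample std / sqrt(n)
    return mean, (mean - z * se, mean + z * se)

# Placeholder recall values from hypothetical repeated training runs
recalls = [0.88, 0.90, 0.91, 0.89, 0.92]
mean, (low, high) = mean_with_ci(recalls)
print(f"mean recall {mean:.3f}, 95% CI [{low:.3f}, {high:.3f}]")
```

The normal approximation improves as the number of runs grows; with only a handful of runs, a Student's t critical value (about 2.78 for five runs) gives a more conservative interval than 1.96.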

The entire code for this project is hosted on my GitHub below: